
Learning Rule-Based Expert Systems Using Python ACT-R

Paired Programming Optional

Due - 11-Sep-2018 11:55pm

Due by Moodle (see bottom for instructions)

Rule-Based Expert Systems allow you to create intelligent systems using a series of If->Then style production rules.

For this assignment, we are going to use the Python ACT-R Cognitive Architecture to build our Rule-Based Expert Systems.
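To make the If->Then idea concrete before we bring in Python ACT-R, here is a minimal sketch of a production system in plain Python. It is not how Python ACT-R is implemented; the `run_rules` function and the tea-making rules are hypothetical names invented for this illustration.

```python
# A toy production system in plain Python (NOT Python ACT-R).
# Working memory is a single goal string; each rule is (name, if, then).

def run_rules(rules, goal, max_cycles=20):
    """Repeatedly fire the first rule whose condition equals the goal."""
    fired = []
    for _ in range(max_cycles):
        for name, condition, new_goal in rules:
            if condition == goal:      # IF part: condition matches memory
                fired.append(name)
                goal = new_goal        # THEN part: update memory
                break
        else:
            break                      # no rule matched, so we stop
    return fired, goal


# Hypothetical rules, for illustration only.
RULES = [
    ("boil_water", "start", "water_hot"),
    ("steep_tea", "water_hot", "tea_ready"),
    ("pour_tea", "tea_ready", "done"),
]

if __name__ == "__main__":
    fired, final_goal = run_rules(RULES, "start")
    print(fired, final_goal)
```

The loop is the whole idea: match the current state against each rule's condition, fire one rule, and repeat until nothing matches.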


Why ACT-R?

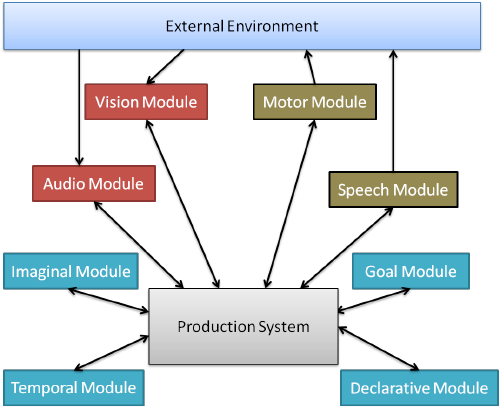

- Though ACT-R has many components that can make it a very useful system for representing intelligent behavior (especially behavior that is human-like), we will be using it for its central production system, which uses rules to define the behavior of its central executive system (the "Production System" in Figure 1).

Figure 1: A high-level view of ACT-R, from (Dancy et al., 2015)

- We will use ACT-R to give you an opportunity to build rule-based agents in a full system, and to give you experience with a well-studied Cognitive Architecture.

- By using Python ACT-R (instead of the canonical LISP version) you also get to use a more modern language to put your agents together.

Setting up your environment

For this assignment (and generally for assignments that involve a programming component), you will need Python 3 (particularly 3.6).

I recommend that you use a virtual environment (or something similar) if you aren't already; for example, see https://docs.python.org/3.6/tutorial/venv.html

This will hopefully help keep you from having to diagnose odd issues that come from quickly installing and uninstalling different software over the years.
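If you want a quick sanity check of your setup, the sketch below reports whether the running interpreter is recent enough and whether it appears to be inside a virtual environment. The function name `describe_environment` is made up for this example, and treating "3.6 or newer" as acceptable is an assumption on my part (the assignment targets 3.6 specifically).

```python
import sys


def describe_environment():
    """Return (version_ok, in_venv) for the running interpreter."""
    # Assumption: any Python >= 3.6 is acceptable for this check.
    version_ok = sys.version_info >= (3, 6)
    # Inside an environment created by the standard venv module,
    # sys.prefix points at the environment and differs from sys.base_prefix.
    in_venv = sys.prefix != getattr(sys, "base_prefix", sys.prefix)
    return version_ok, in_venv


if __name__ == "__main__":
    version_ok, in_venv = describe_environment()
    print("Python version OK:", version_ok)
    print("Inside a virtual environment:", in_venv)
```

Run it with the same `python3` you plan to use for the assignment, after activating your environment.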

Getting Python ACT-R

For this assignment we will use a custom version of Python ACT-R that works better with Python 3 than the versions listed on the CCM Lab website.

- Go to https://gitlab.bucknell.edu/AI-CogSci-Group/Py3ACTR.git to download the code

- Once you have the code downloaded, navigate into the top directory of the downloaded files in a terminal and activate whichever virtual environment you are using

- Install Python ACT-R by running:

    python3 setup.py install
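One way to confirm the install landed in the interpreter you are actually using is to ask Python whether it can locate the package without importing it. This is only a sketch: `is_installed` is a hypothetical helper, and `ccm` is the top-level package name used by the import statements later in this assignment.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module can be located by this interpreter."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # "ccm" is the package imported later in this assignment.
    print("ccm installed:", is_installed("ccm"))
```

If this prints `False`, the most likely cause is that the install ran under a different interpreter or outside your virtual environment.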

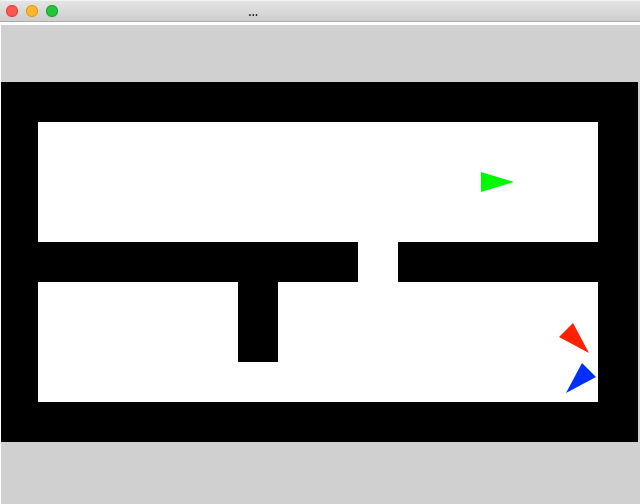

Ensure everything is working by navigating to tutorials/Grid World and running python3 3_many_agents.py

If all works out, you should see a grid environment with agents moving around (similar to the figure below)

Your first Python ACT-R agent

Let's create our first Python ACT-R Agent

Create a new file called RBES1.py

The first thing you'll need in this file is the import statements for the CCM/Python ACT-R library

    # import ccm module library for Python ACT-R classes
    import ccm
    from ccm.lib.actr import *

Now let's create the actual agent

    class SandwichBuilder(ACTR):
        goal = Buffer()
        sandwich = []

        def init():
            goal.set("build_sandwich 1 prep")

        def prep_ingredients(goal="build_sandwich 1 prep"):
            # start building our sandwich!
            goal.set("build_sandwich 2 bottom_bread")

        def place_bottom_bread(goal="build_sandwich 2 ?ingred"):
            # place the bottom piece of bread
            sandwich.append(ingred)
            goal.set("build_sandwich 3 mayo")

        def place_mayo(goal="build_sandwich 3 ?ingred"):
            sandwich.append(ingred)
            goal.set("build_sandwich 4 turkey")

        def place_turkey(goal="build_sandwich 4 ?meat"):
            pass  # TODO: finish this rule

        def place_cheese(goal="build_sandwich 5 ?cheese"):
            pass  # TODO: finish this rule

        def forgot_mayo(goal="build_sandwich 6 top_bread"):
            pass  # TODO: finish this rule

        def place_mayo_top(goal="build_sandwich 6 mayo"):
            pass  # TODO: finish this rule

        def place_top_bread(goal="build_sandwich 7 top_bread"):
            # TODO: finish this rule, then stop the simulation
            self.stop()

As a first try at creating your agent, finish the rules above so that the agent completes the sandwich

Be sure to include the code below, so that you have an empty environment to run the agent and a log that prints out

    class EmptyEnvironment(ccm.Model):
        pass

    env_name = EmptyEnvironment()
    agent_name = SandwichBuilder()
    env_name.agent = agent_name
    ccm.log_everything(env_name)
    env_name.run()

When you're finished, you should see something similar to this in your terminal:

    0.000 agent.production_match_delay 0
    0.000 agent.production_threshold None
    0.000 agent.production_time 0.05
    0.000 agent.production_time_sd None
    0.000 agent.goal.chunk None
    0.000 agent.goal.chunk build_sandwich 1 prep
    0.000 agent.production prep_ingredients
    0.050 agent.production None
    0.050 agent.goal.chunk build_sandwich 2 bottom_bread
    0.050 agent.production place_bottom_bread
    0.100 agent.production None
    0.100 agent.goal.chunk build_sandwich 3 mayo
    0.100 agent.production place_mayo
    0.150 agent.production None
    0.150 agent.goal.chunk build_sandwich 4 turkey
    0.150 agent.production place_turkey
    0.200 agent.production None
    0.200 agent.goal.chunk build_sandwich 5 provolone
    0.200 agent.production place_cheese
    0.250 agent.production None
    0.250 agent.goal.chunk build_sandwich 6 mayo
    0.250 agent.production place_mayo_top
    0.300 agent.production None
    0.300 agent.goal.chunk build_sandwich 7 top_bread
    0.300 agent.production place_top_bread
    0.350 agent.production None
    Sandwich complete!
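Reading the log above, each production appears to be selected at some time t and to finish 0.05 s later (the logged `agent.production_time`), at which point the goal changes and the next production is selected. The plain-Python sketch below reproduces just that arithmetic so you can check your own log against it. `firing_schedule` is a hypothetical helper, not part of Python ACT-R, and it assumes productions fire back to back with no other delays.

```python
PRODUCTION_TIME = 0.05  # seconds, taken from agent.production_time in the log


def firing_schedule(production_names, production_time=PRODUCTION_TIME):
    """Return (selected_at, finished_at, name) for back-to-back productions."""
    schedule = []
    for step, name in enumerate(production_names):
        selected_at = round(step * production_time, 3)
        finished_at = round((step + 1) * production_time, 3)
        schedule.append((selected_at, finished_at, name))
    return schedule


# Production names in the order they appear in the log above.
SANDWICH_RULES = [
    "prep_ingredients", "place_bottom_bread", "place_mayo", "place_turkey",
    "place_cheese", "place_mayo_top", "place_top_bread",
]

if __name__ == "__main__":
    for selected_at, finished_at, name in firing_schedule(SANDWICH_RULES):
        print("%.3f -> %.3f  %s" % (selected_at, finished_at, name))
```

Seven rules at 0.05 s each end at 0.350, which matches the last timestamp in the log.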

A Python ACT-R Rule agent in a simple grid environment

Now for the more fun stuff. We need to create a simple agent that can traverse the board as needed, so let's create an agent that moves around in a grid environment similar to the one you saw in the previous example.

Below is starter code for you to create a rule-based Roomba-like agent.

The idea is to implement the strategies reported to be used by some Roombas (broadly defined) to make your agent move around in the environment and clean dirty spots.

These implementation details can be found in a few places; https://www.cnet.com/news/appliance-science-how-robotic-vacuums-navigate/ seems to describe them fairly well.

    import ccm
    log=ccm.log()

    from ccm.lib import grid
    from ccm.lib.actr import *

    import random

mymap="""

################

# C#

# D #

# #

## ##

# DD #

# D D#

# DD#

################

"""

    class MyCell(grid.Cell):
        dirty=False
        chargingsquare=False

        def color(self):
            if self.chargingsquare: return "green"
            elif self.dirty: return 'brown'
            elif self.wall: return 'black'
            else: return 'white'

        def load(self,char):
            if char=='#': self.wall=True
            elif char=='D': self.dirty=True
            elif char=='C': self.chargingsquare=True

    class MotorModule(ccm.Model):
        FORWARD_TIME = .1
        TURN_TIME = 0.025
        CLEAN_TIME = 0.025

        def __init__(self):
            ccm.Model.__init__(self)
            self.busy=False

        def turn_left(self, amount=1):
            if self.busy: return
            self.busy=True
            self.action="turning left"
            yield MotorModule.TURN_TIME
            amount *= -1
            self.parent.body.turn(amount)
            self.busy=False

        def turn_right(self, amount=1):
            if self.busy: return
            self.busy=True
            self.action="turning right"
            yield MotorModule.TURN_TIME
            self.parent.body.turn(amount)
            self.busy=False

        def turn_around(self):
            if self.busy: return
            self.busy=True
            self.action="turning around"
            yield MotorModule.TURN_TIME
            self.parent.body.turn_around()
            self.busy=False

        def go_forward(self, dist=1):
            if self.busy: return
            self.busy=True
            self.action="going forward"
            for i in range(dist):
                yield MotorModule.FORWARD_TIME
                self.parent.body.go_forward()
            self.action=None
            self.busy=False

        def go_left(self,dist=1):
            if self.busy: return
            self.busy=True
            self.action='turning left'
            yield MotorModule.TURN_TIME
            self.parent.body.turn_left()
            self.action="going forward"
            for i in range(dist):
                yield MotorModule.FORWARD_TIME
                self.parent.body.go_forward()
            self.action=None
            self.busy=False

        def go_right(self):
            if self.busy: return
            self.busy=True
            self.action='turning right'
            yield 0.1
            self.parent.body.turn_right()
            self.action='going forward'
            yield MotorModule.FORWARD_TIME
            self.parent.body.go_forward()
            self.action=None
            self.busy=False

        def go_towards(self,x,y):
            if self.busy: return
            self.busy=True
            self.clean_if_dirty()
            self.action='going towards %s %s'%(x,y)
            yield MotorModule.FORWARD_TIME
            self.parent.body.go_towards(x,y)
            self.action=None
            self.busy=False

        def clean_if_dirty(self):
            "Clean cell if dirty"
            if (self.parent.body.cell.dirty):
                self.action="cleaning cell"
                self.clean()

        def clean(self):
            yield MotorModule.CLEAN_TIME
            self.parent.body.cell.dirty=False

    class ObstacleModule(ccm.ProductionSystem):
        production_time=0

        def init():
            self.ahead=body.ahead_cell.wall
            self.left=body.left90_cell.wall
            self.right=body.right90_cell.wall
            self.left45=body.left_cell.wall
            self.right45=body.right_cell.wall

        def check_ahead(self='ahead:False',body='ahead_cell.wall:True'):
            self.ahead=True

        def check_left(self='left:False',body='left90_cell.wall:True'):
            self.left=True

        def check_left45(self='left45:False',body='left_cell.wall:True'):
            self.left45=True

        def check_right(self='right:False',body='right90_cell.wall:True'):
            self.right=True

        def check_right45(self='right45:False',body='right_cell.wall:True'):
            self.right45=True

        def check_ahead2(self='ahead:True',body='ahead_cell.wall:False'):
            self.ahead=False

        def check_left2(self='left:True',body='left90_cell.wall:False'):
            self.left=False

        def check_left452(self='left45:True',body='left_cell.wall:False'):
            self.left45=False

        def check_right2(self='right:True',body='right90_cell.wall:False'):
            self.right=False

        def check_right452(self='right45:True',body='right_cell.wall:False'):
            self.right45=False

    class CleanSensorModule(ccm.ProductionSystem):
        production_time = 0
        dirty=False

        def found_dirty(self="dirty:False", body="cell.dirty:True"):
            self.dirty=True

        def found_clean(self="dirty:True", body="cell.dirty:False"):
            self.dirty=False

    class VacuumAgent(ACTR):
        goal = Buffer()
        body = grid.Body()
        motorInst = MotorModule()
        cleanSensor = CleanSensorModule()

        def init():
            goal.set("rsearch left 1 0 1")
            self.home = None

        #----ROOMBA----#

        def clean_cell(cleanSensor="dirty:True", motorInst="busy:False", utility=0.6):
            motorInst.clean()

        def forward_rsearch(goal="rsearch left ?dist ?num_turns ?curr_dist",
                            motorInst="busy:False", body="ahead_cell.wall:False"):
            motorInst.go_forward()
            print(body.ahead_cell.wall)
            curr_dist = str(int(curr_dist) - 1)
            goal.set("rsearch left ?dist ?num_turns ?curr_dist")

        def left_rsearch(goal="rsearch left ?dist ?num_turns 0", motorInst="busy:False",
                         utility=0.1):
            motorInst.turn_left(2)
            num_turns = str(int(num_turns) + 1)
            goal.set("rsearch left ?dist ?num_turns ?dist")

        ###Other stuff!

    world=grid.World(MyCell,map=mymap)
    agent=VacuumAgent()
    agent.home=()
    world.add(agent,5,5,dir=0,color="black")

    ccm.log_everything(agent)
    ccm.display(world)
    world.run()

Note that you are free to modify the rules above; they are included as one example of what is possible.

What's going on above?

For starters, we define rules within a class that represents our agent. This class must have ACTR as its parent class.

For now, don't worry about the code above it that inherits from other classes (e.g., the MotorModule). These classes are abstractions that allow us to interact with the architecture in a principled way. For you, just know that they are an interface that is available for some small interaction with the environment.

Also notice the init() function: this is how we initialize our agent within ACT-R. It basically operates like a constructor.

Within this function, we set our initial goal (what we initially have in short-term memory when the simulation begins) and can set the value of any slots, which are represented as class instance attributes.

The goal buffer is one of the things we will use for keeping track of our current context. It provides a representation of short-term memory. (You may recall that STM is an important component of rule-based expert systems in general...in ACT-R this idea applies as well)

    class VacuumAgent(ACTR):
        goal = Buffer()
        body = grid.Body()
        motorInst = MotorModule()
        cleanSensor = CleanSensorModule()

        def init():
            goal.set("rsearch left 1 0 1")
            self.home = None

What about rules?

Rules are built within the class as functions (or methods if you prefer...but you'll notice in the snippet below that we don't really use a self as we would with a normal Python class method)

The argument list for a Rule (Function) provides the condition (LHS, if, etc.) and the body provides the action (RHS, then, etc.)

Within our argument list, we will generally specify the state of a buffer or module. It's not important to know much about these constructs, just that they are a way for us to encapsulate behavior and functionality in a separate entity.
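To see why the argument list can act as a condition, note that Python lets a framework read a function's default argument values without calling it. The sketch below uses the standard `inspect` module to pull the conditions and utility out of a stand-in rule. It only illustrates the idea; I am not claiming this is how Python ACT-R does it internally, and `rule_conditions` is a hypothetical helper.

```python
import inspect


def clean_cell(cleanSensor="dirty:True", motorInst="busy:False", utility=0.6):
    """Stand-in rule: the body (the action) is left out of this sketch."""


def rule_conditions(rule):
    """Split a rule's keyword defaults into (conditions, utility)."""
    conditions = {}
    utility = None
    for name, param in inspect.signature(rule).parameters.items():
        if name == "utility":
            utility = param.default
        else:
            # Argument name = buffer/module to test, default = required state
            conditions[name] = param.default
    return conditions, utility


if __name__ == "__main__":
    print(rule_conditions(clean_cell))
```

Each argument name identifies the buffer or module being tested, and its default value states what must be true for the rule to be a candidate.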

    def clean_cell(cleanSensor="dirty:True", motorInst="busy:False", utility=0.6):
        motorInst.clean()

    def forward_rsearch(goal="rsearch left ?dist ?num_turns ?curr_dist",
                        motorInst="busy:False", body="ahead_cell.wall:False"):
        motorInst.go_forward()
        curr_dist = str(int(curr_dist) - 1)
        goal.set("rsearch left ?dist ?num_turns ?curr_dist")

    def left_rsearch(goal="rsearch left ?dist ?num_turns 0", motorInst="busy:False",
                     utility=0.1):
        motorInst.turn_left(2)
        num_turns = str(int(num_turns) + 1)
        goal.set("rsearch left ?dist ?num_turns ?dist")

Let's look at the forward_rsearch function a bit closer

    def forward_rsearch(goal="rsearch left ?dist ?num_turns ?curr_dist",
                        motorInst="busy:False", body="ahead_cell.wall:False"):
        motorInst.go_forward()
        curr_dist = str(int(curr_dist) - 1)
        goal.set("rsearch left ?dist ?num_turns ?curr_dist")

For our condition, we are testing whether the goal buffer (again, a representation of short-term memory) has the slot values "rsearch" and "left" (these are just a way to keep track of our current strategy or goal).

Statements such as ?dist tell the pattern matcher that we want the production to fire if there is some value in that third slot, but that we don't particularly care what it is for the purposes of matching the condition. Notice that we also use these variables to adjust the goal state and we even are able to use these variables in our action (e.g., curr_dist in the action/RHS comes from ?curr_dist in the condition/LHS).
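The matching and substitution described here can be sketched in a few lines of plain Python. This is an illustration of the idea only, not Python ACT-R's actual matcher; `match_pattern` and `fill_pattern` are hypothetical helpers, and the rule that a reused variable must bind to the same value is an assumption of this sketch.

```python
def match_pattern(pattern, chunk):
    """Match a slot pattern against a chunk string.

    Returns a dict of variable bindings on success, or None on failure.
    """
    pattern_slots = pattern.split()
    chunk_slots = chunk.split()
    if len(pattern_slots) != len(chunk_slots):
        return None
    bindings = {}
    for expected, actual in zip(pattern_slots, chunk_slots):
        if expected.startswith("?"):
            # A variable matches any value, but must stay consistent if reused.
            if bindings.setdefault(expected[1:], actual) != actual:
                return None
        elif expected != actual:
            return None                # literal slot did not match
    return bindings


def fill_pattern(pattern, bindings):
    """Replace each ?variable in a pattern with its bound value."""
    return " ".join(
        bindings[slot[1:]] if slot.startswith("?") else slot
        for slot in pattern.split()
    )


if __name__ == "__main__":
    pattern = "rsearch left ?dist ?num_turns ?curr_dist"
    bindings = match_pattern(pattern, "rsearch left 1 0 1")
    # Same arithmetic as the forward_rsearch action
    bindings["curr_dist"] = str(int(bindings["curr_dist"]) - 1)
    print(fill_pattern(pattern, bindings))  # prints: rsearch left 1 0 0
```

Notice that the new goal string ends in 0, which is exactly the pattern that left_rsearch is waiting for.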

We also use the state of our MotorModule instance (motorInst) and attributes of the body representation to specify additional constraints on the selection of this rule by the central production system.

Note that ACT-R has other cool features and theory that we won't get into, to keep things simple for now. I'm hopeful that we'll be able to make more principled Cognitive Models towards the end of the semester, but this will largely depend on the ebbs and flows of the course.

Using ACT-R gives us access to symbolic (e.g., rules and facts) and subsymbolic (utility and activation) memory representations. The subsymbolic systems implement forms of Bayesian and reinforcement learning.
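The utility values that appear in the starter rules (0.6 for clean_cell, 0.1 for left_rsearch) are a small taste of the subsymbolic side: when several rules match at once, utility decides which one wins. A deliberately simplified sketch of that choice is below. It ignores the noise and learning that ACT-R's utility theory includes, and `select_rule` is a hypothetical helper rather than a Python ACT-R function.

```python
def select_rule(matching_rules):
    """Pick the matching rule with the highest utility.

    matching_rules is a list of (name, utility) pairs. This simplified
    version has no noise and no learning.
    """
    if not matching_rules:
        return None
    best_name, _best_utility = max(matching_rules, key=lambda rule: rule[1])
    return best_name


if __name__ == "__main__":
    # Utilities copied from the starter rules above.
    candidates = [("left_rsearch", 0.1), ("clean_cell", 0.6)]
    print(select_rule(candidates))  # prints: clean_cell
```

In practice this is why the agent cleans a dirty cell before turning when both rules match at the same moment.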

Instructions for submission

We're going to use Gitlab for our general work to make it easy for me to see progress. This also makes moving your code to another repo (e.g., Github) fairly easy should you want to show off Project work.

You will still submit final files on Moodle (this makes grading a bit more straightforward).

Create a new repo on Gitlab.Bucknell.Edu called CSCI379-FA18

- Make sure to give me access to the repo so that I can see your work!

Create your own folder for this assignment called PyACTR-RBES

- Follow the assignment creation steps for other assignments that involve programming as well unless otherwise specified

- By using Gitlab, I can also pull your code and try it out remotely if we run into an impasse and are dealing with a deadline

Grading

This assignment has some fairly straightforward grading. We can think about this assignment as being out of 5 points:

- 4 points: Have your agent do a swirl pattern strategy (like the Roomba algorithm we're trying to mimic) and OK documentation

  - Documentation here includes a README

- 1 point (some partial credit is implicit):

  - Good documentation

  - Full implementation of the Roomba algorithm (implementing the wall tracing and straight random strategies mentioned in the linked article)

  - Creativity (e.g., trying other algorithms that work in concert with the swirl strategy)

Document your code

Document your code

Document your code

Document your code

References

Dancy, C. L., Ritter, F. E., Berry, K. A., & Klein, L. C. (2015). Using a cognitive architecture with a physiological substrate to represent effects of a psychological stressor on cognition. Computational and Mathematical Organization Theory, 21(1), 90-114.