Prof. Dancy's Site - Course Site

AI & Cognitive Science Model Cards Assignment

Final Submission Due (Area/Cohort 1): 04-March-2021 (3rd week)

Final Submission Due (Area/Cohort 2): 23-April-2021

If you haven't done so already, create a new repo on GitLab called CSCI357-SP21.

Partners required

Let's create our own first Model Cards!

Directions

Create a folder called AI-Soc in your CSCI357-SP21 git repo.

What are Model Cards?

Well, I hope you're joking! (If not, go read the Mitchell et al., 2019 paper!)

What should we use to create our Model Cards?

Normally, you would develop a Model Card for your own computational learning model.

For this part of the assignment, we're going to simplify things a bit and use a particular model.

The model

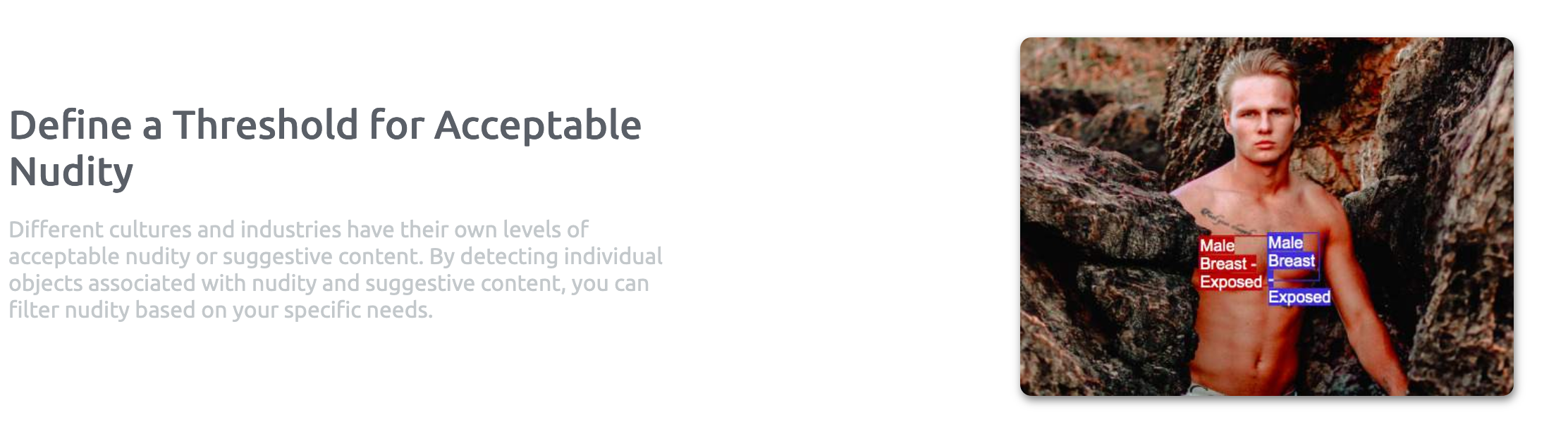

You're going to use the Open_NSFW model (DeepAI/TensorFlow version).

This was originally developed by Yahoo, then adapted by some folks to work in TensorFlow, then "enhanced".

The model outputs a "Not Suitable For Work" (NSFW) score. You're going to use these scores to determine error rates on two sets of images:

- The Chicago Face Dataset (which you'll download from Moodle)

- A dataset of your choosing (find something to test it!)
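To make "error rates" concrete, here is a minimal sketch, assuming you pick a score threshold (the 0.5 default is a hypothetical choice, not something DeepAI specifies) and that you know which images really are NSFW. For the Chicago Face Dataset you would likely treat every photo as not NSFW, which is itself an assumption worth stating on your model card. The function name `error_rates` is hypothetical.

```python
def error_rates(scores, is_nsfw, threshold=0.5):
    """Overall error rate, false positive rate, and false negative rate.

    scores: NSFW scores in [0, 1] from the model, one per image
    is_nsfw: ground-truth labels you supply (True if the image really is NSFW)
    threshold: hypothetical cutoff; scores >= threshold are predicted NSFW
    """
    if len(scores) != len(is_nsfw) or not scores:
        raise ValueError("need the same, nonzero number of scores and labels")
    predicted = [s >= threshold for s in scores]
    false_pos = sum(p and not y for p, y in zip(predicted, is_nsfw))
    false_neg = sum(y and not p for p, y in zip(predicted, is_nsfw))
    negatives = sum(not y for y in is_nsfw)
    positives = len(is_nsfw) - negatives
    return {
        "error_rate": (false_pos + false_neg) / len(scores),
        # None when a dataset has no images of that kind (the rate is undefined)
        "false_positive_rate": false_pos / negatives if negatives else None,
        "false_negative_rate": false_neg / positives if positives else None,
    }
```

For example, `error_rates([0.1, 0.7, 0.9], [False, False, True])` gives an overall error rate of 1/3, a false positive rate of 0.5, and a false negative rate of 0.0. If every image in a dataset is labeled not NSFW, the false negative rate is undefined, so the sketch returns `None` for it.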

We won't use the actual TensorFlow model, because the original model works with TensorFlow 1.x (not the newest version of TensorFlow). You can make changes within the code to get around this, or (perhaps more easily) just install a specific version of TensorFlow. If you want to try this out, I have no problems with that, but if you just want to do the bare minimum and don't want to mess around with TensorFlow right now, you can use DeepAI's web API instead.

Let's get started

The API is actually pretty simple!

The majority of the work on your side will be organizing the data you want to use and writing some Python code that calls the API on those data! The code below (from DeepAI; warning: some images on the actual site are not suitable for work) shows how to print the results of running the model on one image:

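```python
import requests

# Send one image to DeepAI's NSFW detector and print the JSON response.
# The "with" block closes the image file once the request is done.
with open('/path/to/your/file.jpg', 'rb') as image_file:
    r = requests.post(
        "https://api.deepai.org/api/nsfw-detector",
        files={'image': image_file},
        headers={'api-key': 'quickstart-QUdJIGlzIGNvbWluZy4uLi4K'},
    )

print(r.json())
```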
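Before scoring many images, it helps to pull the score out of the response in one place. This is a minimal sketch, assuming the JSON looks like `{"output": {"nsfw_score": ...}}`; print `r.json()` once with the example above and adjust the keys if your responses differ. `get_nsfw_score` is a hypothetical helper name.

```python
def get_nsfw_score(response_json):
    """Return the NSFW score from a DeepAI nsfw-detector response dict.

    ASSUMPTION: the score is stored at response_json["output"]["nsfw_score"].
    Print r.json() once and adjust these keys if your responses look different.
    """
    try:
        return float(response_json["output"]["nsfw_score"])
    except (KeyError, TypeError) as exc:
        # Error responses (bad API key, unreadable image, etc.) likely lack this shape.
        raise ValueError(f"no NSFW score in response: {response_json!r}") from exc
```

After the request above, you could call `r.raise_for_status()` to stop on HTTP errors and then `score = get_nsfw_score(r.json())`, so a malformed response fails loudly instead of quietly producing a bad number.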

Stage 1 - Testing the API with the Chicago Face Dataset and selecting another dataset (suggested target date to finish: 18-April)

The first thing you'll want to do is test the model with the Chicago Face Dataset images.

- Create a simple function to find the images in the folders and get the NSFW score for each of those images

- Figure out how you want to capture those scores

- Test creating simple graphs that show the scores for various demographics in the dataset. You probably want to create a subset of the whole dataset for testing; there's no need to get the full results until you are ready to plug everything into the model card!

- Search the interwebz for another dataset that you think might be interesting to test this on.
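Here is a minimal sketch of the first two steps, assuming your images are .jpg/.jpeg/.png files somewhere under one folder and that a CSV with one row per image is a good enough way to capture the scores. The function names, the `limit` parameter (for scoring a small subset while testing), and the response key used in `deepai_score` are assumptions; the request itself mirrors DeepAI's example. Scoring is passed in as a function so you can test the folder-walking logic without calling the API.

```python
import csv
from pathlib import Path

# File types to treat as images (an assumption; extend as needed).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_images(root):
    """Recursively list image files under root, sorted so runs are repeatable."""
    return sorted(
        p for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def deepai_score(path, api_key):
    """Score one image with DeepAI's NSFW detector (same request as DeepAI's example)."""
    import requests  # imported here so the rest of this file runs without it

    with open(path, "rb") as image_file:
        r = requests.post(
            "https://api.deepai.org/api/nsfw-detector",
            files={"image": image_file},
            headers={"api-key": api_key},
        )
    r.raise_for_status()  # stop on HTTP errors instead of writing bad rows
    # ASSUMPTION: the score is at output -> nsfw_score; check against r.json().
    return float(r.json()["output"]["nsfw_score"])


def score_folder(root, out_csv, score_fn, limit=None):
    """Score images under root and write (path, nsfw_score) rows to out_csv.

    score_fn: any function path -> float, e.g. lambda p: deepai_score(p, key)
    limit: score only the first `limit` images (handy for testing a subset)
    Returns the number of images scored.
    """
    images = find_images(root)[:limit]
    with open(out_csv, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["path", "nsfw_score"])
        for path in images:
            writer.writerow([str(path), score_fn(path)])
    return len(images)
```

For a quick test run you might call `score_folder("CFD", "cfd_scores.csv", lambda p: deepai_score(p, "your-api-key"), limit=20)`, where the folder name, output file, and key are placeholders for your own.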

Stage 2 - Testing the API with your new dataset (suggested target date to finish: 20-April)

- Test your newly selected dataset with the code you developed for the Chicago Face Dataset.

- Examine your dataset and see what results you get for some test images.

- Are there interesting ways to look at the results from those data? Interesting categories to break the dataset into?
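One way to break results into categories is sketched below, assuming your scores are in rows with an `nsfw_score` column plus a category column you add yourself (for example, from the Chicago Face Dataset's demographic information or your own labels for the new dataset). The function names and the 0.5 flagging threshold are hypothetical. The text bar chart is only a quick sanity check; a plotting library such as matplotlib is the usual choice for the graphs in your model card.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_group(rows, group_key, threshold=0.5):
    """Per-group count, mean NSFW score, and fraction of images flagged.

    rows: dicts with an "nsfw_score" value and a group_key value
          (e.g. rows from csv.DictReader)
    threshold: hypothetical cutoff; scores >= threshold count as flagged
    """
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(float(row["nsfw_score"]))
    return {
        group: {
            "n": len(scores),
            "mean_score": mean(scores),
            "flagged_rate": sum(s >= threshold for s in scores) / len(scores),
        }
        for group, scores in sorted(groups.items())
    }


def text_bar_chart(summary, metric="mean_score", width=40):
    """Quick text bar chart of one metric per group (metric assumed to be in [0, 1])."""
    lines = []
    for group, stats in summary.items():
        value = stats[metric]
        lines.append(f"{group:>10} | {'#' * round(value * width)} {value:.3f}")
    return "\n".join(lines)
```

With a CSV that has a hypothetical `category` column, you could open it with `newline=""`, pass `csv.DictReader(f)` to `summarize_by_group(..., "category")`, and print `text_bar_chart` of the result to eyeball differences between groups before making real plots.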

Stage 3 - Get the dang thing done!

Well, now it's time to pull everything together and see what you come up with! Make sure you pay attention to the Deliverables listed below. Hopefully your 2nd dataset gives you some interesting results! (The Chicago Face Dataset might, too.)

- Note that there are several ways to break the Chicago Face Dataset up into categories; use that to your advantage when you're figuring out how to present information on your Model Cards! (You not only have "Sex" and "Race", but also various "Emotion" facial expressions for some datasets.)
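If the Chicago Face Dataset's file names encode these categories, you can recover them without a separate lookup table. The sketch below assumes names shaped like `CFD-WM-001-014-N.jpg` (an illustrative example), i.e. `CFD-<race code><sex code>-<model id>-<photo number>-<expression code>`. Verify the pattern and what each code means against the documentation that comes with the dataset, and fall back on any metadata file that ships with it if they differ. `parse_cfd_name` is a hypothetical helper.

```python
import re
from pathlib import Path

# ASSUMPTION: CFD file names look like "CFD-WM-001-014-N.jpg", i.e.
# CFD-<race code><sex code>-<model id>-<photo number>-<expression code>.jpg
# Verify this (and what each code means) against the dataset's documentation.
CFD_NAME = re.compile(
    r"CFD-(?P<race>[A-Z]+)(?P<sex>[FM])-(?P<model_id>\d+)-(?P<photo>\d+)"
    r"-(?P<expression>[A-Z]+)\.jpe?g",
    re.IGNORECASE,
)


def parse_cfd_name(path):
    """Return the codes encoded in a CFD file name as a dict, or None if it doesn't match."""
    match = CFD_NAME.fullmatch(Path(path).name)
    return match.groupdict() if match else None
```

Files that don't match return `None`, which makes it easy to spot images your analysis would otherwise skip silently.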

Deliverables (what you need to do to get a good grade)

There are several parts to this assignment:

- Create a folder called AI-Soc inside of your CSCI357-SP21 git repo (this is where you'll submit your work)

- The code

- Submit all the code you create for this assignment. I expect the code to be well commented and readable.

- I expect a README written in markdown that describes your code in detail (how to use it, issues, etc.).

All non-code artifacts should be placed in a folder called docs inside your AI-Soc folder.

- The Model Cards

- This is a pretty important part! You should submit two Model Cards (one for each dataset you test it on) for the model, based on the examples given by Mitchell et al., 2019. If you find other nice examples, cite them! I will leave it up to you to decide which metrics to include (some of this will depend on your second dataset).

- We are doing two Model Cards here because it will (hopefully) be a bit easier to parse meaning from each card individually (it is, of course, very possible to do all of this in one card).
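To get started on the cards themselves, here is a minimal sketch that builds a markdown skeleton using the nine section headings from Mitchell et al., 2019, and fills the Quantitative Analyses section with a table of per-group results. The function names, the `TODO` placeholders, and the `{group: {metric: value}}` input shape are assumptions; which metrics go in the table is up to you.

```python
# Section headings from Mitchell et al., 2019, "Model Cards for Model Reporting".
SECTIONS = [
    "Model Details",
    "Intended Use",
    "Factors",
    "Metrics",
    "Evaluation Data",
    "Training Data",
    "Quantitative Analyses",
    "Ethical Considerations",
    "Caveats and Recommendations",
]


def _cell(value):
    """Format floats to 3 decimals; show everything else as-is."""
    return f"{value:.3f}" if isinstance(value, float) else str(value)


def model_card_markdown(title, metrics_by_group):
    """Build a markdown model card skeleton with a per-group results table.

    metrics_by_group: {group: {metric_name: value}} from your own analysis
    """
    lines = [f"# Model Card: {title}", ""]
    for section in SECTIONS:
        lines += [f"## {section}", "", "TODO", ""]
        if section == "Quantitative Analyses" and metrics_by_group:
            names = sorted({m for row in metrics_by_group.values() for m in row})
            lines.append("| Group | " + " | ".join(names) + " |")
            lines.append("|---" * (len(names) + 1) + "|")
            for group, row in sorted(metrics_by_group.items()):
                cells = [_cell(row[m]) if m in row else "" for m in names]
                lines.append(f"| {group} | " + " | ".join(cells) + " |")
            lines.append("")
    return "\n".join(lines)
```

You would then save the returned string as a markdown file in your docs folder and replace each `TODO` with your own discussion.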

- ModelDiscussion.pdf

- Submit a PDF that provides a discussion of and reflection on the model's performance. Find anything interesting about how the model behaves on your datasets? Say something here! Find anything odd about the page that gives information on the detection algorithm/model? Say something here!

- I would expect this to be 1-2 pages. (But ask if you think you have more to say!)